Hong Kong News

How Hong Kong can play a role in preventing AI Armageddon

There has been a significant uptick of late in discussion of artificial intelligence and the threats it poses. There are several reasons for this, even if the recent headlines have been grabbed by the exhortations of Tesla chief executive Elon Musk and others to pause training of AI systems more powerful than GPT-4 for at least six months.

With the emergence of the AI-powered chatbot ChatGPT – and alternatives like Jasper and YouChat – concerns have been raised about AI-generated images and the uncanny quality of the deepfakes, such as those of Pope Francis in a white Balenciaga puffer coat and of former US president Donald Trump in handcuffs.

When reality and fiction become indistinguishable in terms of images and sound, then we have a problem. This is because the power of such believable imagery and sound to affect and influence human opinion and behaviour is vast and the implications severe.

How will governments, institutions from banks to universities, and every sector that could be affected stay ahead of the curve in terms of identifying false imagery and documentation? Conversely, how can the public have faith that such capabilities might not also be used for political, financial or malign purposes by these same players?

In the academic domain, discussion is currently focused on ChatGPT and its use or non-use in universities, given that students could employ the technology to generate essays, for example. No consensus has yet emerged; there is a clear need for enforceable regulation across jurisdictions. It seems, though, that we are always playing catch-up with an AI ecosystem that is accelerating.

Perhaps in the future we will only be willing to trust images and sound generated via some uncrackable quantum code coupled with blockchain-like validity protocols, with everything else to be taken with a pinch of salt.

But the impressionable, paranoid, biased, less well educated, opinionated and religious can all be taken in, to various degrees, by AI put to pernicious use. This is not an insignificant fraction of society, as the unfolding disaster of Brexit in the United Kingdom makes only too clear (but not to some).

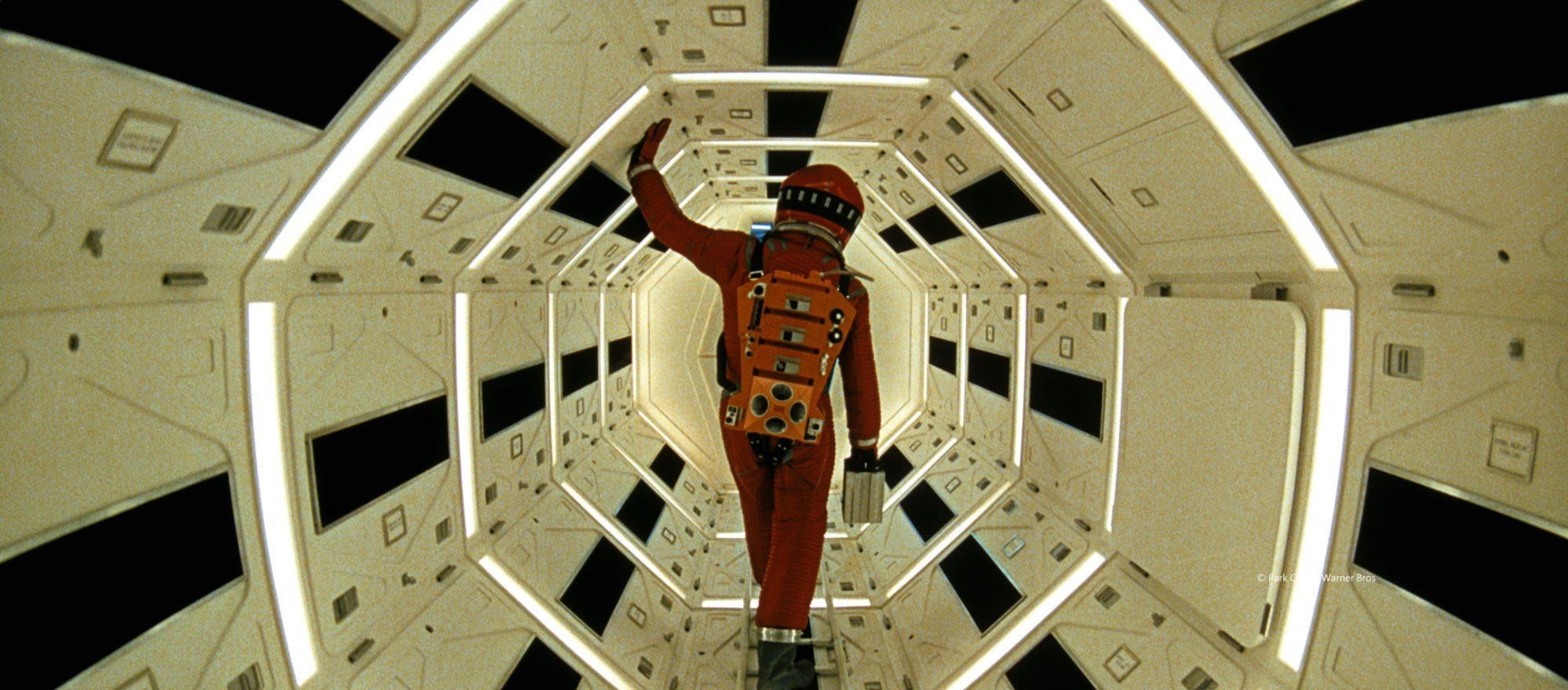

A still from 2001: A Space Odyssey. In the film, a spacecraft’s computer, which has humanlike intelligence, begins to work against the astronauts.

Of course, in popular culture, the perils and benefits of AI have played out on our screens over decades, in everything from the murderous AI computer HAL in Stanley Kubrick’s 2001: A Space Odyssey to Steven Spielberg’s ultimately sad film A.I., released in 2001, to the more dystopian Matrix and Terminator franchises.

The potential dangers of uncontrolled AI have been made plain in such films and other media, and so have seeped into the public consciousness, but are the risks of AI Armageddon overblown?

To me, it eventually comes down not to whether I can get an AI app to write this article, but whether true AI – that not only becomes indistinguishable from human intelligence but rapidly surpasses it – is just around the corner. This is where the greatest threats to humanity may arise.

A simple thought experiment illustrates the point. If AI emerges in the near future as self-aware in the truest sense, and thereafter is able to learn and improve at an accelerated rate and can access and control everything connected to the Internet of Things upon which modern life depends, what might this mean? Would AI feel compelled to act for the “eventual greater good”? Could anyone stop it if it did?

A powerful, self-aware AI assessing the current state of our fragile planet, its collapsing ecosystems and the damage human actions cause to nearly every other living thing might well pause to reflect. It could conclude that the planet needs saving from the one organism responsible for what is happening. Perhaps an engineered pandemic would arise with a much higher mortality rate than that of Covid-19.

A key point is that, as things stand, AI-related capabilities are emerging in a somewhat ad hoc way and at a rapidly increasing pace, with no real control or oversight. Some amazing new development occurs and has an immediate impact; only when the implications of its capabilities are realised do calls come to regulate it after the fact. This is dangerous because, once the true AI genie is out of the bottle, what could we do?

Of course, the world is increasingly multipolar and disjointed, and coordinated international control of AI development, though desirable, might be difficult to achieve in practice. But we should try.

In that vein, Hong Kong has some of the best universities in the world, with leadership in fintech and data science, as exemplified by the recently established HKU Musketeers Foundation Institute of Data Science at the University of Hong Kong. There is also the Centre for Artificial Intelligence and Robotics under the Hong Kong Institute of Science and Innovation of the Chinese Academy of Sciences.

Finally, we also enjoy a trusted and impressive regulatory and compliance infrastructure. So Hong Kong has the potential to emerge as a global centre for regulatory oversight of AI technology. The world needs to move quickly to regulate advancements in AI, and perhaps Hong Kong can help show the way before it is too late.